This blog summarizes the research paper by Sah, Sudhakar, et al., "MCUBench: A Benchmark of Tiny Object Detectors on MCUs." Proceedings of the IEEE/CVF European Conference on Computer Vision 2024, accepted at the ECCV 2024 CADL Workshop. You can find the CADL Workshop presentation here.

In the rapidly evolving field of computer vision, object detection plays a crucial role in applications ranging from self-driving cars to security systems. However, deploying these models on resource-constrained devices like Microcontroller Units (MCUs) is difficult, because MCUs offer tight RAM and Flash budgets and limited compute. Enter MCUBench, a comprehensive benchmark designed to evaluate the performance of tiny object detectors on MCUs.

What is MCUBench?

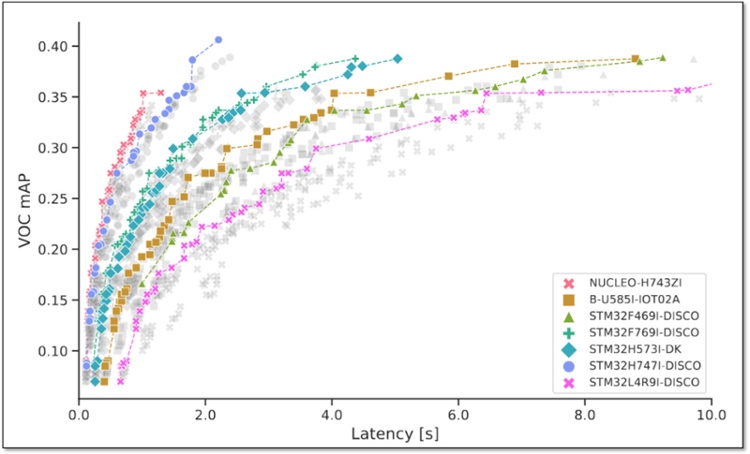

MCUBench is a benchmark of over 100 tiny YOLO-based object detection models, evaluated on the VOC and COCO datasets across seven different MCUs. For each model and input resolution, it reports mean average precision (mAP), latency, RAM usage, and Flash usage. The comparison is controlled. The training pipeline and detection head are fixed, while the backbone, neck, and input resolution vary. As a result, differences in the collected metrics can be attributed to those varying components.
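In a controlled comparison like this, you enumerate a grid of configurations where only the backbone, neck, and resolution change. The Python sketch below illustrates the idea. The `DetectorConfig` class, the component names, and the resolution values are hypothetical placeholders. They are not the actual models or settings used in MCUBench.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class DetectorConfig:
    """One candidate detector in a controlled comparison (hypothetical schema)."""
    backbone: str
    neck: str
    head: str        # held fixed across all candidates
    resolution: int  # square input side length in pixels


def build_search_space(backbones, necks, resolutions, head="fixed_head"):
    """Enumerate every backbone/neck/resolution combination with one shared head."""
    return [
        DetectorConfig(b, n, head, r)
        for b, n, r in product(backbones, necks, resolutions)
    ]


# Hypothetical component names and resolutions, for illustration only.
configs = build_search_space(
    backbones=["backbone_a", "backbone_b"],
    necks=["neck_x", "neck_y"],
    resolutions=[160, 224, 320],
)
print(len(configs))  # 2 backbones * 2 necks * 3 resolutions = 12
```

Keeping the head fixed (the `head` default) is what makes the comparison controlled. Any difference between two configurations then comes from the components that were allowed to vary.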

Key Contributions

Comprehensive Benchmark: MCUBench includes over 100 tiny YOLO-based object detection models specifically designed for MCU-grade hardware. These models are trained on the VOC/COCO datasets and selected through Pareto analysis across four different MCU platforms.

Performance Insights: The benchmark demonstrates that integrating modern detection heads and training techniques lets a range of YOLO architectures, including older models like YOLOv3, achieve an excellent mAP-latency trade-off on MCUs.

Model Selection Tool: MCUBench provides trained weights for its models. Application developers can therefore select and fine-tune a model based on their own trade-offs between average precision, latency, RAM, and Flash, without training many candidate models themselves.
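The following sketch shows one way such a results table might be queried. It picks the most accurate model that fits a latency, RAM, and Flash budget. The `BenchmarkEntry` schema, the `select_model` helper, and all numbers are hypothetical. None of them come from the paper or its released files.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class BenchmarkEntry:
    """Per-model measurements on one board (hypothetical schema, not MCUBench's format)."""
    name: str
    map_score: float   # mean average precision on the evaluation set
    latency_ms: float  # on-device inference latency
    ram_kb: float      # peak RAM usage
    flash_kb: float    # Flash footprint


def select_model(entries: List[BenchmarkEntry], max_latency_ms: float,
                 max_ram_kb: float, max_flash_kb: float) -> Optional[BenchmarkEntry]:
    """Return the highest-mAP entry that satisfies every budget, or None if none fits."""
    feasible = [
        e for e in entries
        if e.latency_ms <= max_latency_ms
        and e.ram_kb <= max_ram_kb
        and e.flash_kb <= max_flash_kb
    ]
    return max(feasible, key=lambda e: e.map_score, default=None)


# Hypothetical measurements, for illustration only.
entries = [
    BenchmarkEntry("model_a", 0.45, 120.0, 300.0, 900.0),
    BenchmarkEntry("model_b", 0.52, 250.0, 450.0, 1500.0),
    BenchmarkEntry("model_c", 0.48, 180.0, 350.0, 1100.0),
]
best = select_model(entries, max_latency_ms=200, max_ram_kb=400, max_flash_kb=1200)
print(best.name)  # model_c
```

Here the latency budget excludes `model_b`, and `model_c` beats `model_a` on mAP. If no entry fits, the function returns `None` rather than raising an error.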

Methodology

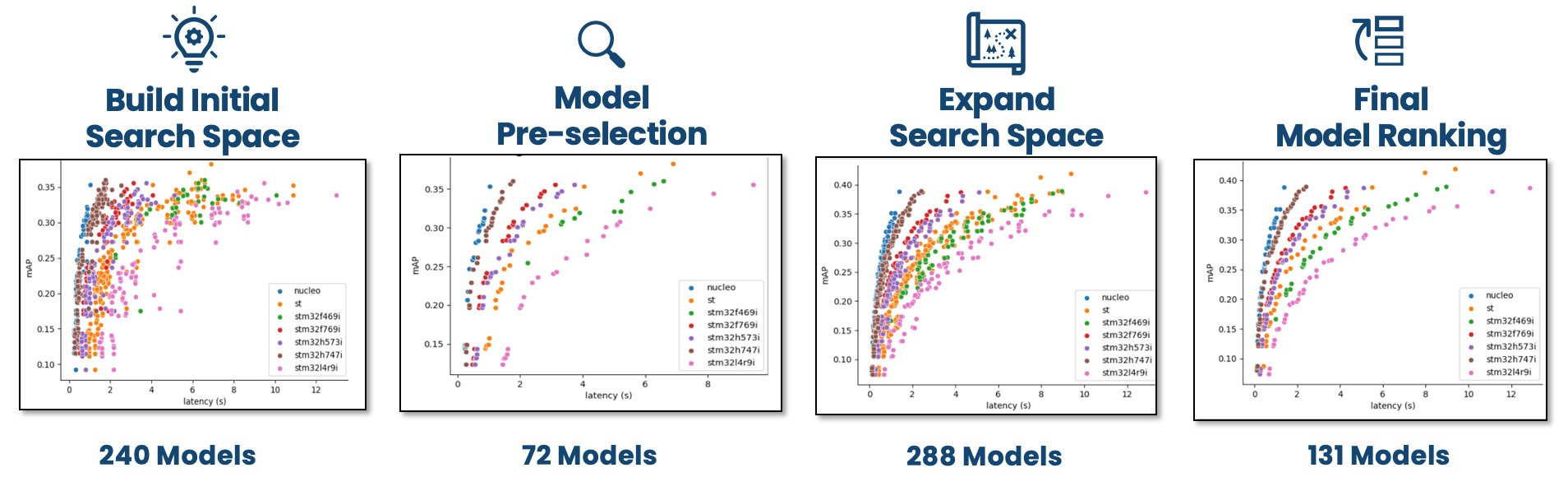

The benchmark process involves several steps:

- Initial Model Training: Models are trained on the Pascal VOC/COCO datasets and evaluated on seven different Nucleo boards from STMicroelectronics.

- Model Pre-selection: Pareto-optimal models are selected based on their VOC/COCO mAP and on-device latency.

- Expanded Search Space: Selected models are fine-tuned at different resolutions to generate a final set of Pareto-optimal models.

- Final Model Ranking: Models are evaluated on seven different MCUs to build new Pareto curves, highlighting the trade-offs between mAP and latency.
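Both the pre-selection and final ranking steps rely on Pareto optimality. A model is kept only if no other model is at least as accurate and at least as fast while being strictly better in one of the two. Below is a minimal sketch of that filter for (mAP, latency) pairs. The tuple layout and example values are assumptions for illustration. With several boards, the filter would be run separately for each MCU's latency measurements.

```python
def pareto_front(models):
    """Keep models that are not dominated on (higher mAP, lower latency).

    `models` is a list of (name, map_score, latency_ms) tuples.
    Exact duplicates collapse to a single entry.
    """
    # Sort by latency ascending; among equal latencies, put the highest mAP first.
    ordered = sorted(models, key=lambda m: (m[2], -m[1]))
    front = []
    best_map = float("-inf")
    for name, score, latency in ordered:
        # A model survives only if it beats every faster (or equally fast) model on mAP.
        if score > best_map:
            front.append((name, score, latency))
            best_map = score
    return front


# Hypothetical (name, mAP, latency in ms) values, for illustration only.
models = [
    ("m1", 0.40, 80.0),
    ("m2", 0.35, 100.0),
    ("m3", 0.50, 150.0),
    ("m4", 0.55, 300.0),
    ("m5", 0.45, 200.0),
]
front = pareto_front(models)
print([m[0] for m in front])  # ['m1', 'm3', 'm4']
```

Sorting by latency first means a single pass after an O(n log n) sort is enough. In this example, `m2` is dropped because `m1` is both faster and more accurate. `m5` is dropped because `m3` dominates it.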

Hardware Benchmarking

MCUBench evaluates models on a diverse range of MCUs, including the STM32H7, STM32F4, STM32F7, and STM32L4 series. Each MCU offers different configurations of RAM, Flash, processor type, and clock frequency, allowing for a comprehensive analysis of performance across various hardware platforms.

Results

The benchmarking procedure revealed several notable characteristics of YOLO-based models on different MCUs. For instance, older YOLO architectures, when equipped with modern detection heads, can outperform newer models on MCUs. The results also showed that tuning input resolution and network width is an important lever for improving the mAP-latency trade-off on constrained devices.
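A back-of-the-envelope calculation helps explain why resolution and width are such effective levers. For a standard (non-grouped) convolution, multiply-accumulate operations (MACs) grow with the output feature-map area and with the product of input and output channels. MACs therefore scale quadratically with both input resolution and a width multiplier. The layer sizes below are hypothetical. Real MCU latency also depends on memory traffic and kernel implementations, so it will not track MACs exactly.

```python
def conv2d_macs(h_out: int, w_out: int, c_in: int, c_out: int, k: int) -> int:
    """Multiply-accumulates of a standard (non-grouped) k x k convolution."""
    return h_out * w_out * c_in * c_out * k * k


# Hypothetical 3x3 layer, 64 -> 64 channels, on a 320x320 feature map (stride 1, same padding).
base = conv2d_macs(320, 320, 64, 64, 3)

# Lowering resolution from 320 to 256 shrinks the feature-map area by (256/320)^2.
lower_res = conv2d_macs(256, 256, 64, 64, 3)

# A 0.5 width multiplier halves both c_in and c_out, so MACs drop to 0.25x.
half_width = conv2d_macs(320, 320, 32, 32, 3)

print(round(lower_res / base, 2))  # 0.64
print(half_width / base)           # 0.25
```

Depthwise convolutions are an exception. Each channel is convolved independently, so their MACs grow only linearly with width.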

Conclusion

MCUBench is a valuable tool for benchmarking the MCU performance of contemporary object detectors. It aids in model selection based on specific constraints, providing a clear understanding of the trade-offs between accuracy, latency, RAM, and Flash usage. This benchmark can significantly benefit developers looking to deploy efficient object detection models on low-footprint devices.

Want to benchmark your MCU?

Contact us at info@deeplite.ai and we will get you set up!

I hope you enjoyed this blog summary of the article. Please let me know if you have any questions or feedback. 😊