As discussed in our previous blog post, compressing large deep learning models will pave the way for many new AI applications and enable AI to assist our everyday lives. This makes model compression a hot research area, provoking multiple intense online discussions, including many blogs and media articles about various optimization use-cases and compression results.

Popular AI conferences such as NeurIPS 2020 and CVPR 2020 each accepted more than 50 research papers on deep learning model compression, and more than 100 such papers were submitted to ICLR 2021. This means that over 200 new research papers on deep learning model compression were accepted or submitted in 2020 alone.

Does this make deep learning model compression a solved problem? Unfortunately, no.

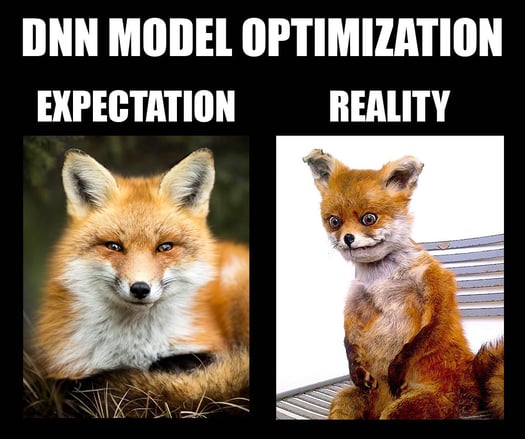

Industry professionals expect model compression to be available as an off-the-shelf solution in production. However, there is a BIG GAP between what works in research and what works in production. Results reported in model compression research papers often fail to carry over to other datasets, to other tasks such as classification, object detection, or translation, or even to other domains such as images, text, or audio. Many existing DNN optimization approaches are designed with popular deep learning architectures, such as ResNet, DenseNet, or BERT, and standard benchmark datasets, such as CIFAR and ImageNet, in mind. When these approaches are applied to a noisy custom dataset in the real world or to a custom deep learning model, they tend to perform poorly compared to what is reported in the research papers.

Despite numerous articles available, the Deeplite team’s expertise is often put to the test with many open-ended questions about the model compression process from partners, collaborators, clients, and investors.

In this blog series, we gathered all these uncertainties and misinformed opinions to provide our honest and practical answers to them. We hope this will provide more clarity around the misconceptions of deep learning model compression.

Deep learning model compression is very complicated. In our previous blog post, we talked about some key methods for performing compression. As promising as it sounds today, compression still faces many challenges and roadblocks before it can be used in a production environment or a real-world application. Let's go over some of these challenges.

Today, pruning the unimportant weights in a model can achieve more than 90% sparsity, which means that only 10% of the weights are needed to keep the model working. One could assume that the model will therefore also shrink to 10% of its original size.

In practice, unstructured weight pruning is a pseudo-compression approach: it does not reduce the size or the FLOPs of the model. Instead, it replaces unwanted weight values with zeros, so the number of computations performed and the size of the model (stored as dense tensors) remain the same. To actually compress the model, libraries such as PyTorch or TensorFlow would need a `SparseConvolutional` (hypothetical) layer that performs sparse matrix computation in an optimized way. And to achieve that, the underlying hardware should support sparse matrix multiplication.
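To make the pseudo-compression point concrete, here is a minimal Python sketch (standard library only) of unstructured magnitude pruning on a hypothetical 10,000-weight layer stored as 32-bit floats. The helper names and the coordinate-style sparse format, which stores one 4-byte index next to every surviving value, are illustrative assumptions rather than any framework's API.

```python
import random
from array import array


def magnitude_prune(weights: array, sparsity: float) -> array:
    """Unstructured magnitude pruning: zero the `sparsity` fraction of smallest |w|."""
    k = int(len(weights) * sparsity)  # how many weights to zero out
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = array("f", weights)  # copy; still one 32-bit float per weight
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


def dense_bytes(weights: array) -> int:
    """Storage for a dense tensor: zeros cost as much as any other value."""
    return len(weights) * weights.itemsize


def sparse_bytes(weights: array, index_bytes: int = 4) -> int:
    """Hypothetical coordinate-style storage: one value plus one index per nonzero."""
    nnz = sum(1 for w in weights if w != 0.0)
    return nnz * (weights.itemsize + index_bytes)


random.seed(0)
w = array("f", (random.gauss(0.0, 1.0) for _ in range(10_000)))
p = magnitude_prune(w, sparsity=0.9)

print(dense_bytes(w), dense_bytes(p))  # 40000 40000: 90% zeros, same dense size
print(sparse_bytes(p))  # 8000: only 5x smaller once indices are stored
```

The dense buffer is identical in size before and after pruning. Even a sparse format only pays off 5x here rather than 10x, because every surviving weight now carries an index, which is one reason real gains depend on dedicated sparse kernels and hardware.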

In theory, channel pruning (or layer pruning) removes either a few channels from a layer or the entire layer from a model. In practice, there are two challenges. First, in a complex directed acyclic graph (DAG) model architecture with bypass connections, pruning a few channels in one layer must be propagated to every branch that consumes or merges with that layer's output. Generalizing this to any black-box model is a complex task. Second, model accuracy is highly sensitive to channel pruning, so it is challenging to prune the channels of a model without severely reducing its real-world accuracy.
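The first challenge can be illustrated with a small sketch. It assumes two hypothetical 1x1 convolutions (equivalently, linear layers) connected in a simple chain, with weights stored as nested lists indexed `[out_channel][in_channel]`, and it ranks channels by L1 norm, which is only one common heuristic and not necessarily what any particular tool uses.

```python
from typing import List, Tuple

Matrix = List[List[float]]  # weight[out_channel][in_channel]


def l1_norms(weight: Matrix) -> List[float]:
    """L1 norm of each output channel, a common importance heuristic."""
    return [sum(abs(x) for x in row) for row in weight]


def prune_channels(w1: Matrix, w2: Matrix, keep: int) -> Tuple[Matrix, Matrix, List[int]]:
    """Keep the `keep` most important output channels of layer 1 and drop the
    matching input channels of layer 2 so the two layers still connect."""
    norms = l1_norms(w1)
    ranked = sorted(range(len(w1)), key=lambda c: norms[c], reverse=True)
    kept = sorted(ranked[:keep])  # preserve the original channel order
    new_w1 = [w1[c] for c in kept]  # fewer output channels in layer 1
    new_w2 = [[row[c] for c in kept] for row in w2]  # fewer input channels in layer 2
    return new_w1, new_w2, kept


w1 = [[0.1, 0.1, 0.1], [1.0, 1.0, 1.0], [0.01, 0.0, 0.0], [0.5, -0.5, 0.5]]  # 4 out x 3 in
w2 = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]  # 2 out x 4 in
new_w1, new_w2, kept = prune_channels(w1, w2, keep=2)
print(kept)    # [1, 3]
print(new_w2)  # [[2.0, 4.0], [6.0, 8.0]]
```

Even in this two-layer chain, removing output channels from the first layer forces a matching edit to the second. With a bypass connection, the pruned tensor is also added elementwise to another branch, so the same `kept` indices would have to be applied to every layer feeding that addition; tracing these dependencies through an arbitrary DAG is what makes the task hard to automate.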

In theory, weight decomposition reduces the number of model parameters through matrix (or tensor) factorization. In practice, however, decomposing one layer typically replaces it with three to four new layers. Though the number of computations in the model is reduced, the number of layers increases. This can increase the overall memory footprint of the model, for example through additional intermediate activations, and might reduce the throughput of the model in real-life applications.
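As an illustration of the trade-off, the arithmetic below uses Tucker-2 decomposition, one common way to factorize a convolution, which replaces a single k x k layer with three layers. The channel counts and ranks (256 channels, ranks of 64) are hypothetical and chosen only to show the pattern.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of one k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def tucker2_params(c_in: int, c_out: int, k: int, r_in: int, r_out: int):
    """Tucker-2 replaces one k x k conv with three layers:
    1x1 (c_in -> r_in), k x k core (r_in -> r_out), 1x1 (r_out -> c_out)."""
    layers = [
        conv_params(c_in, r_in, 1),
        conv_params(r_in, r_out, k),
        conv_params(r_out, c_out, 1),
    ]
    return sum(layers), len(layers)


original = conv_params(256, 256, 3)
decomposed, n_layers = tucker2_params(256, 256, 3, r_in=64, r_out=64)
print(original)              # 589824 parameters in 1 layer
print(decomposed, n_layers)  # 69632 parameters in 3 layers
```

In this example the parameter count drops by roughly 8.5x, but one layer becomes three sequential layers, each writing and reading its own intermediate feature map, which is where the extra memory traffic and potential throughput loss come from.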

Weight quantization reduces the precision of weight values instead of removing weights. In theory, the precision can be reduced all the way down to 2 bits, turning 32-bit values into 2-bit values and compressing the model by 16x.

In practice, the underlying library (PyTorch, TensorFlow, etc.) and the hardware do not always support low-bit precision processing. For example, the widely used CUDA libraries for NVIDIA GPUs do not generally provide 2-bit kernels, so a 2-bit matrix is often typecast to 32-bit values before computation, cancelling out the benefits of quantization and compression.
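The following minimal sketch shows both sides of the argument: packing 2-bit codes four to a byte yields the theoretical 16x saving, while a runtime that can only compute in 32-bit floats has to expand the codes back to full width. The uniform 4-level quantizer and the helper names are assumptions made for illustration.

```python
from array import array


def quantize_2bit(weights, w_min, w_max):
    """Uniform quantization to 4 levels (codes 0..3) over [w_min, w_max]."""
    levels = 2**2 - 1
    scale = (w_max - w_min) / levels
    codes = [min(levels, max(0, round((w - w_min) / scale))) for w in weights]
    return codes, scale


def pack_2bit(codes):
    """Store four 2-bit codes in each byte: this is where the 16x saving comes from."""
    packed = bytearray((len(codes) + 3) // 4)
    for i, c in enumerate(codes):
        packed[i // 4] |= c << (2 * (i % 4))
    return packed


def dequantize_fp32(codes, scale, w_min):
    """What a runtime without 2-bit kernels has to do: expand back to 32-bit floats."""
    return array("f", (w_min + c * scale for c in codes))


weights = [-1.0, -0.3, 0.2, 1.0] * 256  # 1024 hypothetical weights
fp32_bytes = len(weights) * 4
codes, scale = quantize_2bit(weights, w_min=-1.0, w_max=1.0)
packed = pack_2bit(codes)
expanded = dequantize_fp32(codes, scale, w_min=-1.0)

print(fp32_bytes, len(packed))            # 4096 256 -> 16x smaller on disk
print(len(expanded) * expanded.itemsize)  # 4096 -> back to full size at run time
```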

TensorFlow offers two types of optimization (compression) for deep learning models: unstructured weight pruning and 16-bit floating-point (FP-16) weight quantization.

We already discussed unstructured weight pruning and its challenges earlier, so let's concentrate on 16-bit weight quantization (FP-16). In theory, FP-16 quantization halves the model size, but the model can actually run in 16-bit precision only if the underlying hardware supports 16-bit processing; otherwise, the weights are typically converted back to 32-bit at run time. As of today, a lot of hardware does not support 16-bit processing off the shelf.
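Python's standard `struct` module can serialize IEEE 754 half-precision values with the `'e'` format character, which gives a quick way to check the 2x size reduction and the rounding error that FP-16 introduces. The weight values are hypothetical, and this sketch says nothing about how fast FP-16 runs on a given device, which is exactly the hardware-dependent part.

```python
import struct


def pack(values, fmt):
    """Serialize floats little-endian: 'f' = 32-bit float, 'e' = 16-bit float."""
    return struct.pack(f"<{len(values)}{fmt}", *values)


def roundtrip_fp16(values):
    """Store as FP-16, then read back into regular Python floats."""
    return list(struct.unpack(f"<{len(values)}e", pack(values, "e")))


weights = [0.1 * i - 50.0 for i in range(1000)]  # hypothetical values in [-50, 50)
print(len(pack(weights, "f")), len(pack(weights, "e")))  # 4000 2000
max_err = max(abs(a - b) for a, b in zip(weights, roundtrip_fp16(weights)))
print(max_err < 0.02)  # True: FP-16 spacing between 32 and 64 is 2**-5 = 0.03125
```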

In addition, TensorFlow Lite provides INT8 quantization, which works well for only a limited number of model architectures. On TensorRT, both INT8 and FP-16 quantization depend on hardware support. Effectively, the quantization provided by TensorFlow or TensorRT can be used successfully on specific hardware architectures, but not on all of them.
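For reference, INT8 quantization typically maps real values to integers with an affine scheme, real = scale * (q - zero_point), which is the form described in the TensorFlow Lite quantization specification. The sketch below is a generic per-tensor version with a hypothetical value range of [-1, 3]; it is not TensorFlow Lite's or TensorRT's implementation.

```python
def int8_params(x_min: float, x_max: float):
    """Affine (asymmetric) INT8 parameters: real_value = scale * (q - zero_point)."""
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)  # range must contain 0
    scale = (x_max - x_min) / 255.0  # 256 integer levels, -128..127
    zero_point = round(-128 - x_min / scale)
    return scale, zero_point


def quantize(x: float, scale: float, zero_point: int) -> int:
    """Map a real value to the nearest INT8 code, clamped to [-128, 127]."""
    return max(-128, min(127, round(x / scale) + zero_point))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Map an INT8 code back to an approximate real value."""
    return scale * (q - zero_point)


scale, zp = int8_params(-1.0, 3.0)
print(zp)  # -64
for x in (-1.0, 0.0, 1.5, 3.0):
    q = quantize(x, scale, zp)
    print(x, q, round(dequantize(q, scale, zp), 4))
```

Keeping 0.0 exactly representable (it maps to the zero point) matters in practice, for example for zero padding and for weights that were pruned to zero.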

Weight pruning and channel pruning are among the ways to achieve model compression. Applying channel pruning to a DNN removes entire layers, or channels from a layer, which often severely affects the model's accuracy. In production environments, the goal is usually to compress the model while losing as little accuracy as possible, sometimes less than 1%.

This makes channel pruning difficult to apply in production. Figuring out which channels to remove and which to retain is extremely hard. The design space (the set of all possible pruned models) is so large that finding the best pruned architecture is considered an NP-hard problem, which in everyday language means super-duper jumbo hard.
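A rough count shows why exhaustive search is hopeless. The sketch counts only the ways of keeping a non-empty subset of channels in each layer of a hypothetical 16-layer network with 64 channels per layer, ignoring every other design choice and any interaction between layers.

```python
import math


def pruning_design_space(channels_per_layer):
    """Ways to keep a non-empty subset of channels in every layer (2**c - 1 per layer)."""
    return math.prod(2**c - 1 for c in channels_per_layer)


print(pruning_design_space([2, 3]))  # 21 = 3 * 7
space = pruning_design_space([64] * 16)  # hypothetical 16-layer, 64-channel network
print(len(str(space)))  # 309 digits, roughly 2**1024 candidate pruned models
```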

This is probably one of the most common misconceptions in the model optimization industry. Yes, there are many software-only optimization solutions like AutoML or Neural Architecture Search (NAS). But a good DNN optimization implementation always needs appropriate hardware support. Optimization must be considered across the overall workflow design and the lifecycle of models in production. Thus, a healthy combination of software and hardware co-designed optimization, along with a smooth and optimized workflow, is the best way to deliver deep learning models in production.

At Deeplite, we perform two stages of optimization:

Yes, different optimization methods can be stacked together to produce highly compressed models. In fact, many optimization techniques could, in theory, be combined like instruments in a symphony and applied to the same model.

While different optimization techniques do not usually conflict with each other, you must always be smart about which methods to combine, which part of the model to apply each one to, and in what order to apply them. At Deeplite, we combine different optimization methods to produce a highly compressed and robust model with a negligible reduction in model performance.
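A minimal sketch of stacking two of the techniques discussed earlier, unstructured pruning followed by symmetric INT8 quantization, is shown below. The order, the 90% sparsity, and the coordinate-style storage format with 4-byte indices are illustrative assumptions, not a description of Deeplite's pipeline.

```python
import random


def prune(weights, sparsity):
    """Unstructured magnitude pruning: zero the smallest-|w| fraction."""
    k = int(len(weights) * sparsity)
    cutoff = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in cutoff else w for i, w in enumerate(weights)]


def quantize_int8_symmetric(weights):
    """Symmetric INT8 quantization (zero point 0), so pruned zeros stay exactly 0."""
    scale = max(abs(w) for w in weights) / 127
    return [round(w / scale) for w in weights], scale


def stored_bytes(codes, value_bytes, index_bytes=4):
    """Hypothetical sparse storage: one value plus one index per nonzero entry."""
    nnz = sum(1 for c in codes if c != 0)
    return nnz * (value_bytes + index_bytes)


random.seed(0)
weights = [random.gauss(0.0, 1.0) for _ in range(10_000)]
dense_fp32 = len(weights) * 4  # 40000 bytes before any optimization

pruned = prune(weights, sparsity=0.9)           # step 1: pruning
codes, scale = quantize_int8_symmetric(pruned)  # step 2: quantization
print(dense_fp32, stored_bytes(codes, value_bytes=1))  # 40000 5000
```

Because quantization runs after pruning and uses a zero point of 0, every pruned weight is still stored as an exact 0 and can be skipped, giving 8x less storage in this example. Changing the order or the quantization scheme requires re-checking that this property still holds.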

Neural Architecture Search (NAS), or AutoML, can be a real pain to work with. Why? Because the search space of all possible models is huge. There are usually hundreds of hyperparameters to search over, making NAS feel like an intractable problem that takes forever to solve! Additionally, neural networks generated by machines are often opaque and inelegant when examined by a human expert. Many recent research papers report NAS results where the search for a model can take years of compute time. For instance, in this example paper (refer to table 2), it takes 69 years of TPUv3 computation to produce the required results.

But there is light at the end of the tunnel! We can perform smart NAS (also known as efficient NAS), which vastly reduces the search space. For most production requirements or real-world problems, an existing teacher model already provides sufficient accuracy. This teacher model is used as a seed, and a NAS algorithm then searches its nearest neighborhood to find a much smaller, compressed model with equivalent accuracy. Similarly, some cool NAS tricks, like attention mechanisms that reduce the overall design space, can bring down the time of a search.
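The seeded search idea can be sketched as a greedy local search that starts from the teacher architecture and only explores its immediate neighbors. Everything here is hypothetical: architectures are encoded as per-layer channel counts, `param_count` is a toy cost model, and `toy_score` stands in for the expensive train-and-validate step. It is a sketch of the general idea, not Deeplite's NAS algorithm.

```python
from typing import Callable, List, Tuple

Arch = Tuple[int, ...]  # hypothetical encoding: output channels per layer


def neighbors(arch: Arch, shrink: float = 0.25, min_ch: int = 8) -> List[Arch]:
    """Architectures that differ from `arch` by shrinking exactly one layer."""
    result = []
    for i, c in enumerate(arch):
        smaller = max(min_ch, int(c * (1 - shrink)))
        if smaller < c:
            result.append(arch[:i] + (smaller,) + arch[i + 1:])
    return result


def param_count(arch: Arch, in_ch: int = 3) -> int:
    """Toy cost model: a chain of 1x1 layers, params = sum of c_in * c_out."""
    total, prev = 0, in_ch
    for c in arch:
        total += prev * c
        prev = c
    return total


def seeded_search(teacher: Arch, evaluate: Callable[[Arch], float],
                  tolerance: float, max_steps: int = 50) -> Arch:
    """Greedy local search seeded with the teacher: keep moving to the smallest
    neighbor whose score stays within `tolerance` of the teacher's score."""
    target = evaluate(teacher)
    best = teacher
    for _ in range(max_steps):
        ok = [a for a in neighbors(best) if target - evaluate(a) <= tolerance]
        if not ok:
            break
        best = min(ok, key=param_count)
    return best


def toy_score(arch: Arch) -> float:
    """Stand-in for training + validation: pretends accuracy saturates at 48 channels."""
    return sum(min(c, 48) for c in arch) / (48 * len(arch))


print(seeded_search((64, 64, 64), toy_score, tolerance=0.0))  # (48, 48, 48)
```

Each step evaluates at most one candidate per layer, so the number of evaluations grows with network depth and the number of steps rather than with the size of the full design space.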

This is a tricky question. The straightforward answer is "Yes!": optimized deep learning models can be ported from one hardware platform to another. For example, the ONNX format, together with runtimes such as ONNX Runtime, supports different backend hardware: CPU, GPU, multi-GPU, etc. In PyTorch, if you have a `model`, a single call to `model.cpu()` makes it CPU-ready and `model.cuda()` makes it GPU-ready. Additionally, model optimization can significantly reduce the number of parameters and the on-chip memory required for inference, enabling a model to be ported from a high-capacity device to a device with tighter resource constraints, even microcontroller (MCU) level devices.
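To illustrate the memory side of porting, the sketch below only does arithmetic: it checks whether a model's weights fit in a device's storage budget at a given precision. The 256 KiB budget and the 250,000-parameter model are hypothetical, and the estimate ignores activations, runtime code, and any other memory the application needs.

```python
import math


def weight_bytes(num_params: int, bits_per_weight: int) -> int:
    """Storage for the weights alone (activations and runtime code not included)."""
    return math.ceil(num_params * bits_per_weight / 8)


def fits(num_params: int, bits_per_weight: int, budget_bytes: int) -> bool:
    """True if the weights fit within the given byte budget."""
    return weight_bytes(num_params, bits_per_weight) <= budget_bytes


MCU_FLASH = 256 * 1024  # hypothetical microcontroller with 256 KiB of flash
params = 250_000        # hypothetical compressed model

print(weight_bytes(params, 32), fits(params, 32, MCU_FLASH))  # 1000000 False
print(weight_bytes(params, 8), fits(params, 8, MCU_FLASH))    # 250000 True
```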

However, running these models on custom or uncommon hardware is tricky and requires a lot of engineering effort to build the necessary software support. This is one of the most interesting areas where DNN model compression can have the most impact as more hardware becomes available for deep learning inference. We may be at the start of a trend of compressing existing DL infrastructure stacks and porting them from existing hardware to new hardware as new options become commercially available.

Typically, when a model is compressed by removing certain weights, channels, or layers, it must be retrained (or sufficiently fine-tuned), or go through a distillation process, before the accuracy of the compressed model can be measured. Thus, it is very difficult to gauge the accuracy drop caused by compression without training. This has been one of the holy grail problems of deep learning model compression.

We should acknowledge that there is ongoing research on predicting a model's performance on a dataset tabula rasa, that is, before any training. However, this research is still at a nascent stage, and it is unclear how it will translate to real-world settings. Furthermore, we typically see that first training a large model to reach a higher baseline accuracy and then compressing it heavily ultimately produces a more accurate and smaller model than simply training a small model on the task dataset from the start.

The same compression does not speed up every platform equally. The throughput of a model on a GPU is much lower than on an ASIC such as a TPU. That is why, if we apply the same model compression on ASIC architectures and on a GPU, we will probably see the largest speedup on the ASIC and a smaller latency improvement on the GPU.

A decent latency improvement from model compression can also be achieved on other hardware, such as FPGAs, ARM CPUs, or even microcontrollers (MCUs), particularly by enabling one or more models to fit in on-chip memory, which minimizes the cost of data movement during inference.

In theory, the ideal solution would be to compress the large deep learning model directly on the edge device. In practice, deep learning model compression is still a *costly* operation that requires sufficient memory, computational resources, and time. Thus, most software-based and hardware-based compression today is performed on cloud computing resources, and the compressed model is then deployed to the edge device.

Going forward, on-device compression is a really important research problem. Current research in this area is very nascent, and there is a lot of scope for solving some fundamental and challenging problems in this space.

That's all for now! We hope you enjoyed reading this article and found it useful. Would you like us to cover any other topics related to DNN model optimization? Let us know by leaving a comment below. Thank you!

Anush Sankaran

Senior Research Scientist at Deeplite

Medium.com: @anush.sankaran

Twitter: @goodboyanush

Anastasia Hamel

Digital Marketing Manager at Deeplite

Medium.com: @anastasia.hamel

Twitter: @anastasia_hamel